Investigating a compiler bug

On rare occasions we find a bug in the compiler. exploring this one was instructive.

A user reported that the simple instruction...

print(Float(Int32(60000.00))

was printing 33003.00!

Confirmed this is not expected on a macOS playground, then dug into where this came from.

It's well documented how the S4A compiler produces AVR machine code... Swift code is compiled to bitcode, then an llvm AVR assembler compiles the bitcode for AVR, so one of these steps is at fault, right?

There's actually a hidden subtlety in the first step, swift compilation. Because most of the time when you write Swift code, without realising it you're actually using a swift library and non primitive types... the standard library! So in fact there's effectively hidden Swift code inside the above simple swift code.

While the micro swift standard library is not open source, it's very closely based on the common, open source swift library. From that we can see a constructor for Float from Int32 that's being called here and seems to be at fault. https://github.com/apple/swift/blob/main/stdlib/public/core/FloatingPointTypes.swift.gyb...

// Fast-path for conversion when the source is representable as int,

// falling back on the generic _convert operation otherwise.

@inlinable // FIXME(inline-always)

@inline(__always)

public init<Source: BinaryInteger>(_ value: Source) {

if value.bitWidth <= ${word_bits} {

if Source.isSigned {

let asInt = Int(truncatingIfNeeded: value)

_value = Builtin.sitofp_Int${word_bits}_FPIEEE${bits}(asInt._value)

} else {

let asUInt = UInt(truncatingIfNeeded: value)

_value = Builtin.uitofp_Int${word_bits}_FPIEEE${bits}(asUInt._value)

}

} else {

// TODO: we can do much better than the generic _convert here for Float

// and Double by pulling out the high-order 32/64b of the integer, ORing

// in a sticky bit, and then using the builtin.

self = ${Self}._convert(from: value).value

}

}

In our case it goes down the path

self = ${Self}._convert(from: value).value

and that function is in https://github.com/apple/swift/blob/main/stdlib/public/core/FloatingPoint.swift...

@inlinable

public // @testable

static func _convert<Source : BinaryInteger>(

from source: Source

) -> (value: Self, exact: Bool) {

// Useful constants:

let exponentBias = (1 as Self).exponentBitPattern

let significandMask = ((1 as RawSignificand) << Self.significandBitCount) &- 1

// Zero is really extra simple, and saves us from trying to normalize a

// value that cannot be normalized.

if _fastPath(source == 0) { return (0, true) }

// We now have a non-zero value; convert it to a strictly positive value

// by taking the magnitude.

let magnitude = source.magnitude

var exponent = magnitude._binaryLogarithm()

// If the exponent would be larger than the largest representable

// exponent, the result is just an infinity of the appropriate sign.

guard exponent <= Self.greatestFiniteMagnitude.exponent else {

return (Source.isSigned && source < 0 ? -.infinity : .infinity, false)

}

// If exponent <= significandBitCount, we don't need to round it to

// construct the significand; we just need to left-shift it into place;

// the result is always exact as we've accounted for exponent-too-large

// already and no rounding can occur.

if exponent <= Self.significandBitCount {

let shift = Self.significandBitCount &- exponent

let significand = RawSignificand(magnitude) &<< shift

let value = Self(

sign: Source.isSigned && source < 0 ? .minus : .plus,

exponentBitPattern: exponentBias + RawExponent(exponent),

significandBitPattern: significand

)

return (value, true)

}

// exponent > significandBitCount, so we need to do a rounding right

// shift, and adjust exponent if needed

let shift = exponent &- Self.significandBitCount

let halfway = (1 as Source.Magnitude) << (shift - 1)

let mask = 2 * halfway - 1

let fraction = magnitude & mask

var significand = RawSignificand(truncatingIfNeeded: magnitude >> shift) & significandMask

if fraction > halfway || (fraction == halfway && significand & 1 == 1) {

var carry = false

(significand, carry) = significand.addingReportingOverflow(1)

if carry || significand > significandMask {

exponent += 1

guard exponent <= Self.greatestFiniteMagnitude.exponent else {

return (Source.isSigned && source < 0 ? -.infinity : .infinity, false)

}

}

}

return (Self(

sign: Source.isSigned && source < 0 ? .minus : .plus,

exponentBitPattern: exponentBias + RawExponent(exponent),

significandBitPattern: significand

), fraction == 0)

}

...so all that code (and more) is getting added inline into our simple looking Swift before it's optimised and compiled to bitcode!

The way I took to get through this is to copy/paste all inlined functions into a new blank S4A file, then trim it down and use print statements to find where the calculation is going wrong. This worked.

And I finally got down to this simple function, which was producing the math error...

@inline(never)

func checks(magnitude: UInt32, shift: Int) {

let significand = magnitude &<< shift

print(shift)

print(significand)

}

...when compiled to bitcode (and run through llvm-dis to produce the human readable text form of bitcode), I get...

; Function Attrs: noinline

define hidden swiftcc void @"$s4main6checks9magnitude5shiftys6UInt32V_SitF"(i32 %0, i16 %1) local_unnamed_addr addrspace(1) #3 !dbg !94 {

call addrspace(1) void @llvm.dbg.value(metadata i32 %0, metadata !97, metadata !DIExpression()), !dbg !98

call addrspace(1) void @llvm.dbg.value(metadata i16 %1, metadata !99, metadata !DIExpression()), !dbg !100

%3 = and i16 %1, 31, !dbg !101

%4 = zext i16 %3 to i32, !dbg !101

%5 = shl i32 %0, %4, !dbg !101

call addrspace(1) void @llvm.dbg.value(metadata i32 %5, metadata !103, metadata !DIExpression()), !dbg !104

tail call swiftcc addrspace(1) void @"$s3AVR5print_10addNewlineySi_SbtF"(i16 %1, i1 true), !dbg !105

tail call swiftcc addrspace(1) void @"$s3AVR19printNumberInternal6number10addNewlineys6UInt32V_SbtF"(i32 %5, i1 true), !dbg !106

ret void, !dbg !111

}

...stripping out unimportant details like debug, attributes, address spaces...

define void @"$s4main6checks9magnitude5shiftys6UInt32V_SitF"(i32 %0, i16 %1) {

%3 = and i16 %1, 31

%4 = zext i16 %3 to i32

%5 = shl i32 %0, %4

call void @"$s3AVR5print_10addNewlineySi_SbtF"(i16 %1, i1 true)

call void @"$s3AVR19printNumberInternal6number10addNewlineys6UInt32V_SbtF"(i32 %5, i1 true)

ret void

}

The mangled names look weird but you can just use xcrun swift-demangle to see what they mean… $s4main6checks9magnitude5shiftys6UInt32V_SitF ---> main.checks(magnitude: Swift.UInt32, shift: Swift.Int) -> () … $s3AVR5print_10addNewlineySi_SbtF ---> AVR.print(_: Swift.Int, addNewline: Swift.Bool) -> () … $s3AVR19printNumberInternal6number10addNewlineys6UInt32V_SbtF ---> AVR.printNumberInternal(number: Swift.UInt32, addNewline: Swift.Bool) -> ()

So what the code should be doing is simple…

%3 = and i16 %1, 31

%4 = zext i16 %3 to i32

these do nothing much, taking the shift value (which is 8 in this case) and putting it into a 32 bit integer…

%5 = shl i32 %0, %4

this is the business… do a left shift of the significand, a 32 bit integer containing the value 60000 by the value in %4 which is a 32 bit integer containing the value 8. Because we’ve now got the bitcode down to the smallest possible test case that produces an error we can see what’s happening in the assembly language without huge pages of confusing extra things. The output of the above program is…

8

60159

so it is calculating 60000 << 8 to be 60159 which is clearly wrong. The value should be 15360000 according to my calculator (and according to a swift playground).

We have now got down to one line of bitcode that's seemingly creating faulty AVR machine code and we are getting pretty confident that the bug is not in the swift compiler (the above bitcode all looks fine and matches the logic of the Swift code once you've put in all the standard library code inline).

So it looks like the llvm AVR back end is maybe faulty in at least some cases with the SHL instruction on 32 bit integer operands.

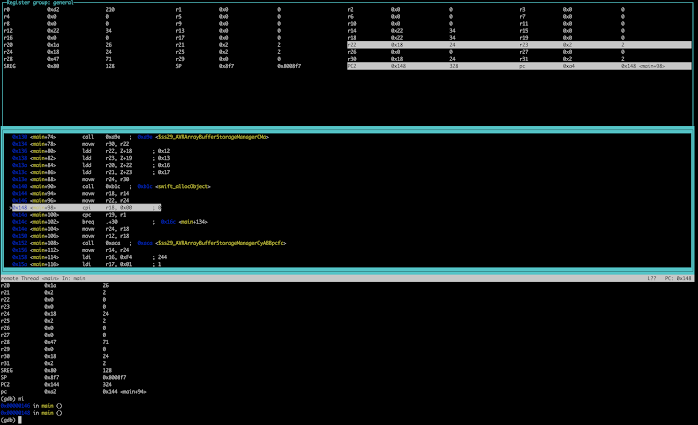

What is the assembly produced?

00000a54 <$s4main6checks9magnitude5shiftys6UInt32V_SitF>:

a54: cf 92 push r12

a56: df 92 push r13

a58: ef 92 push r14

a5a: ff 92 push r15

a5c: 0f 93 push r16

a5e: 1f 93 push r17

a60: 8a 01 movw r16, r20

a62: 7c 01 movw r14, r24

a64: 6b 01 movw r12, r22

a66: c8 01 movw r24, r16

a68: 61 e0 ldi r22, 0x01 ; 1

a6a: 0e 94 86 05 call 0xb0c ; 0xb0c <$s3AVR5print_10addNewlineySi_SbtF>

a6e: 0f 71 andi r16, 0x1F ; 31

a70: b6 01 movw r22, r12

a72: c7 01 movw r24, r14

a74: 40 2f mov r20, r16

a76: 0e 94 6c 09 call 0x12d8 ; 0x12d8 <__ashlsi3>

a7a: 41 e0 ldi r20, 0x01 ; 1

a7c: 0e 94 47 05 call 0xa8e ; 0xa8e <$s3AVR19printNumberInternal6number10addNewlineys6UInt32V_SbtF>

a80: 1f 91 pop r17

a82: 0f 91 pop r16

a84: ff 90 pop r15

a86: ef 90 pop r14

a88: df 90 pop r13

a8a: cf 90 pop r12

a8c: 08 95 ret

...ignoring the function prolog/epilog and the debug print statements, we can home in on the part that does the SHL operator...

a6e: 0f 71 andi r16, 0x1F ; 31

a70: b6 01 movw r22, r12

a72: c7 01 movw r24, r14

a74: 40 2f mov r20, r16

a76: 0e 94 6c 09 call 0x12d8 ; 0x12d8 <__ashlsi3>

...this looks wrong. The gcc runtime routine being called, _ashlsi3 is the wrong left shift routine. See the docs: https://gcc.gnu.org/onlinedocs/gccint/Integer-library-routines.html.

Given we are passing a 32 bit integer, it should probably be _ashldi3.

Looking at the machine code linked in from libgcc.a for that routine, which is being called, shows it's definitely wrong...

000012d8 <__ashlsi3>:

12d8: 02 c0 rjmp .+4 ; 0x12de <__ashlsi3+0x6>

12da: 88 0f add r24, r24

12dc: 99 1f adc r25, r25

12de: 6a 95 dec r22

12e0: e2 f7 brpl .-8 ; 0x12da <__ashlsi3+0x2>

12e2: 08 95 ret

This takes a 16 bit register pair in r24/r25 and left shifts it by the 8 bit value in r22.

In our case, we are shifting the magnitude of the uint32, which is exactly equal to its value, 60,000 (0xEA60).

There's still a bit of a mystery because the top 16 bits are empty so right now that reads to me as 0<<96 ... which is of course just 0. I was expecting 60159

But I don't think that's enough of a discrepancy to assume I'm wrong, this looks like the right approach.

Next up, writing a unit test and fixing the bug!

Comments

Post a Comment